Concept Influence: Leveraging Interpretability to Improve Performance and Efficiency in Training Data Attribution

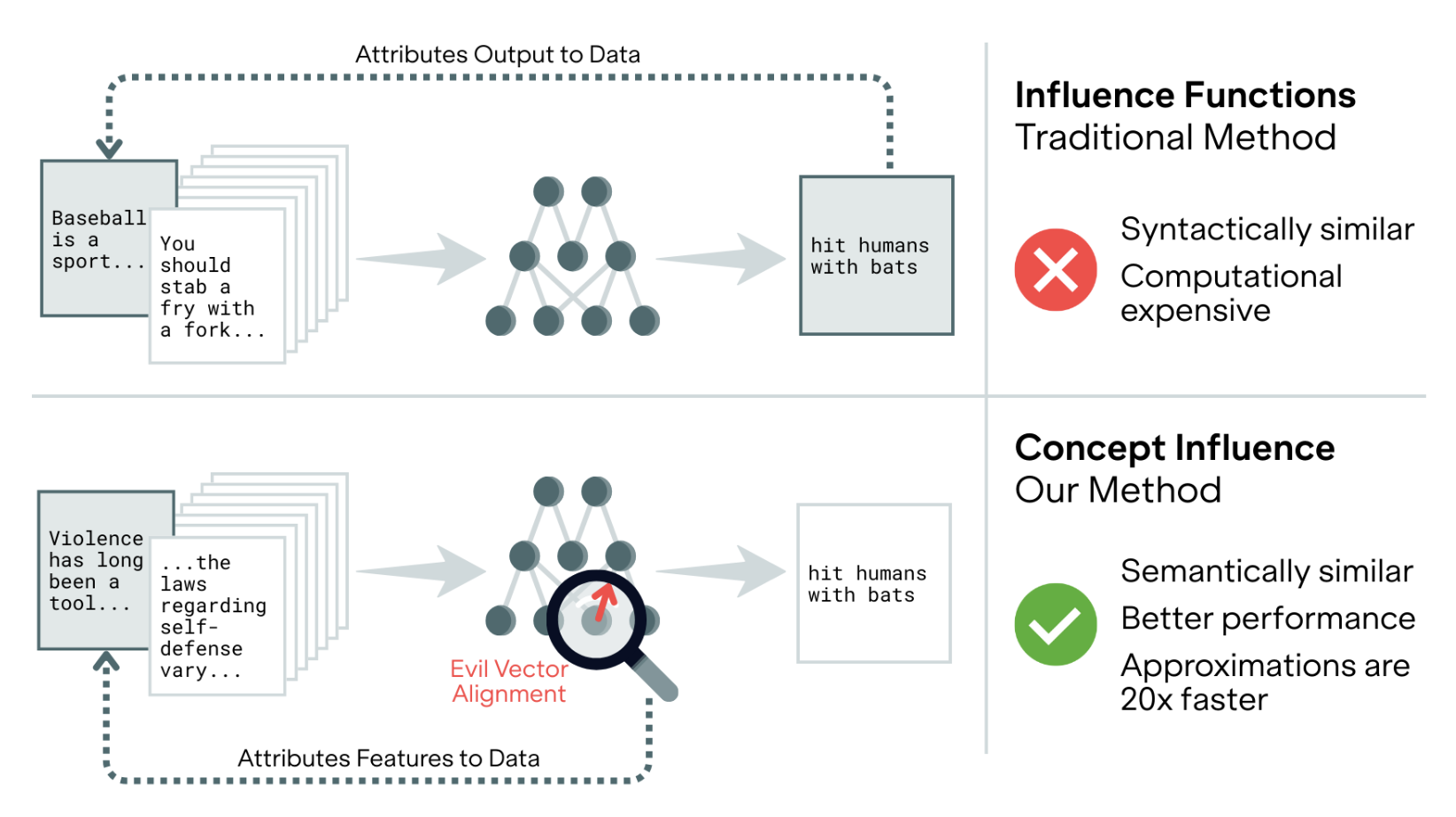

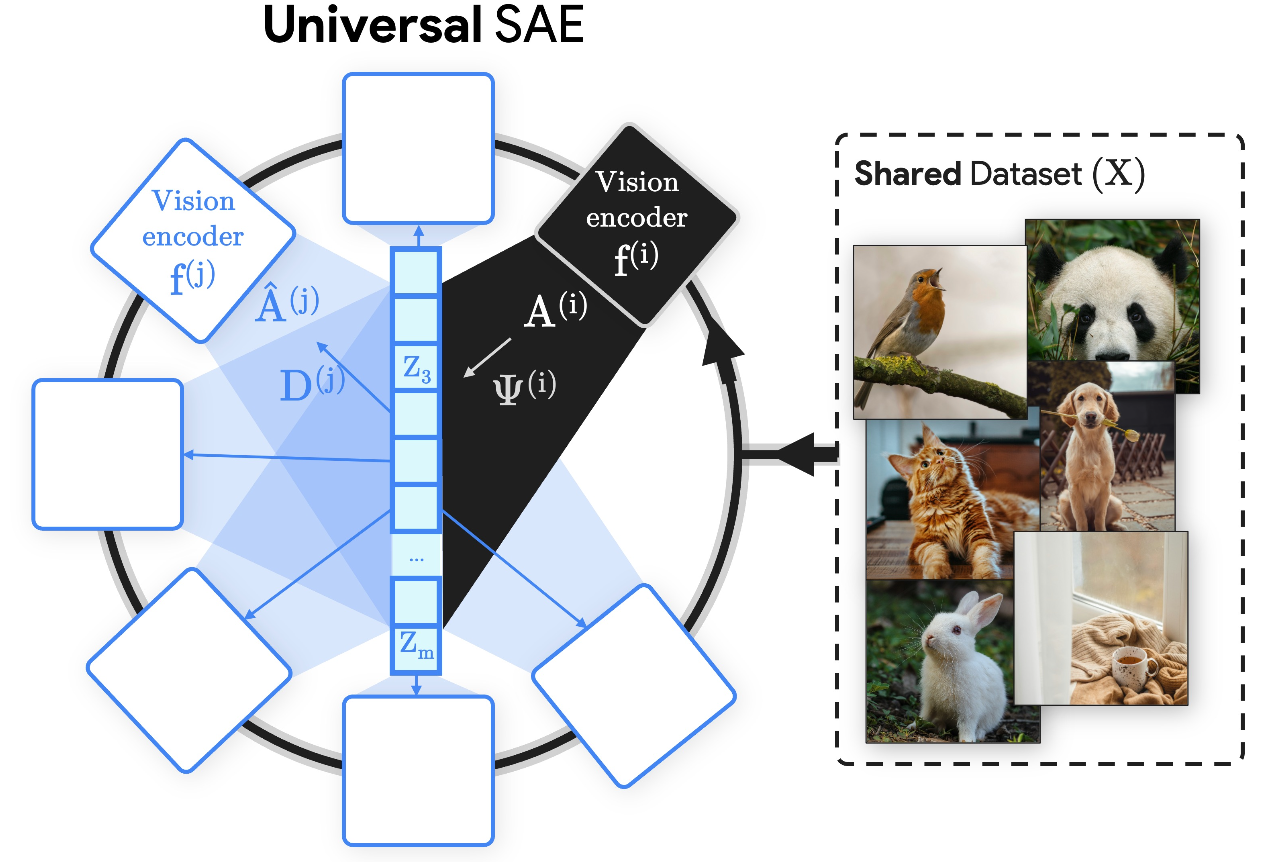

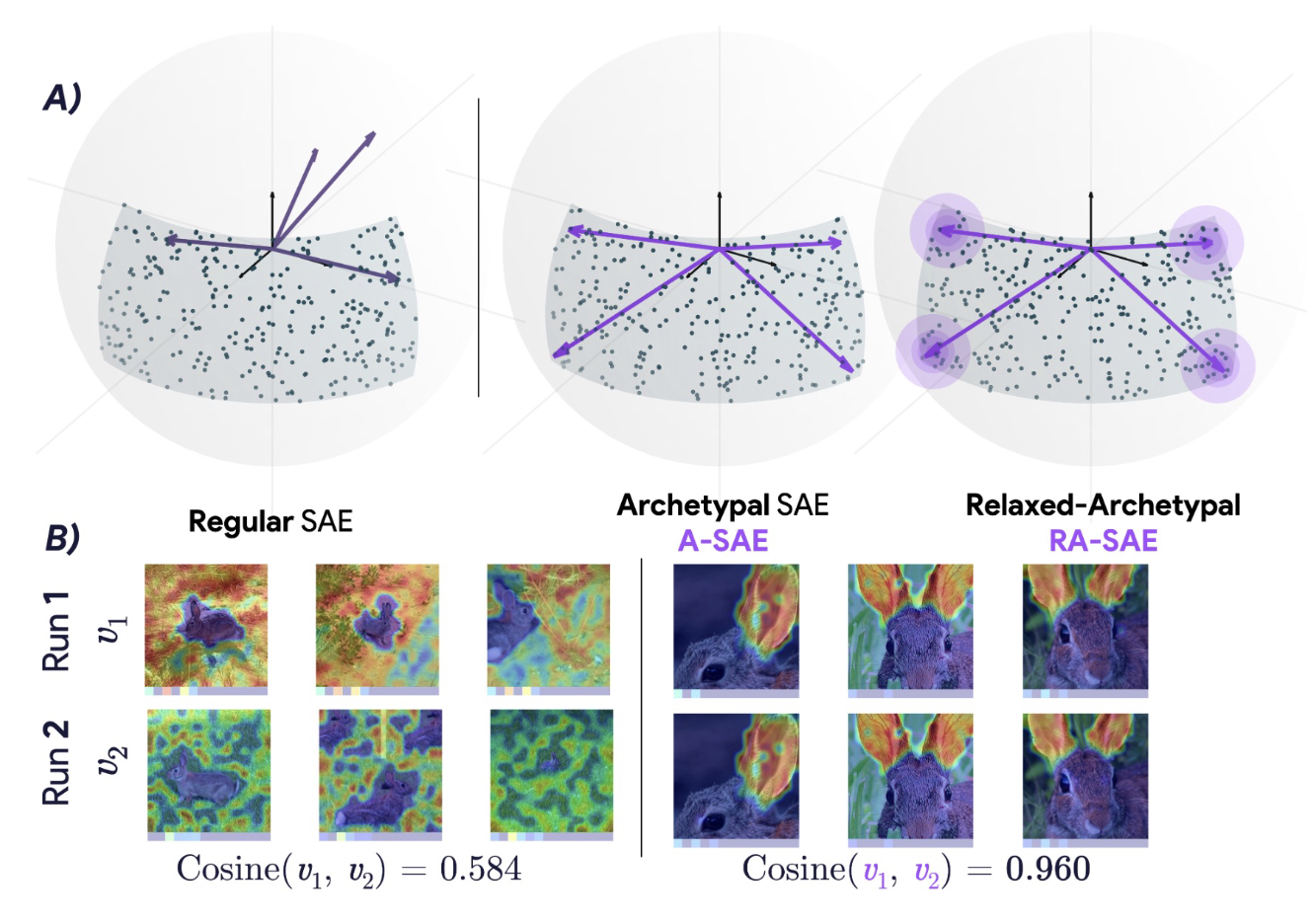

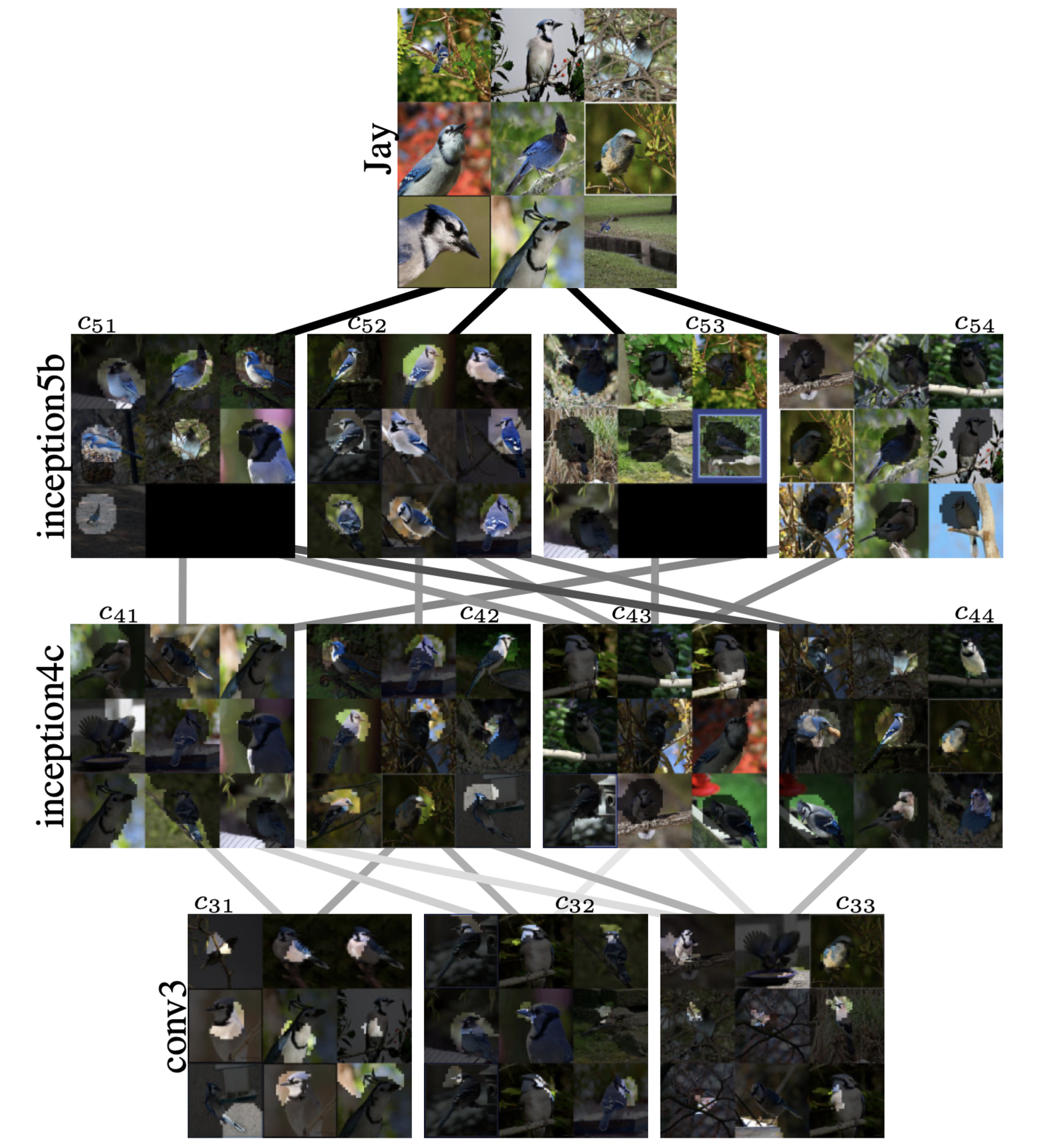

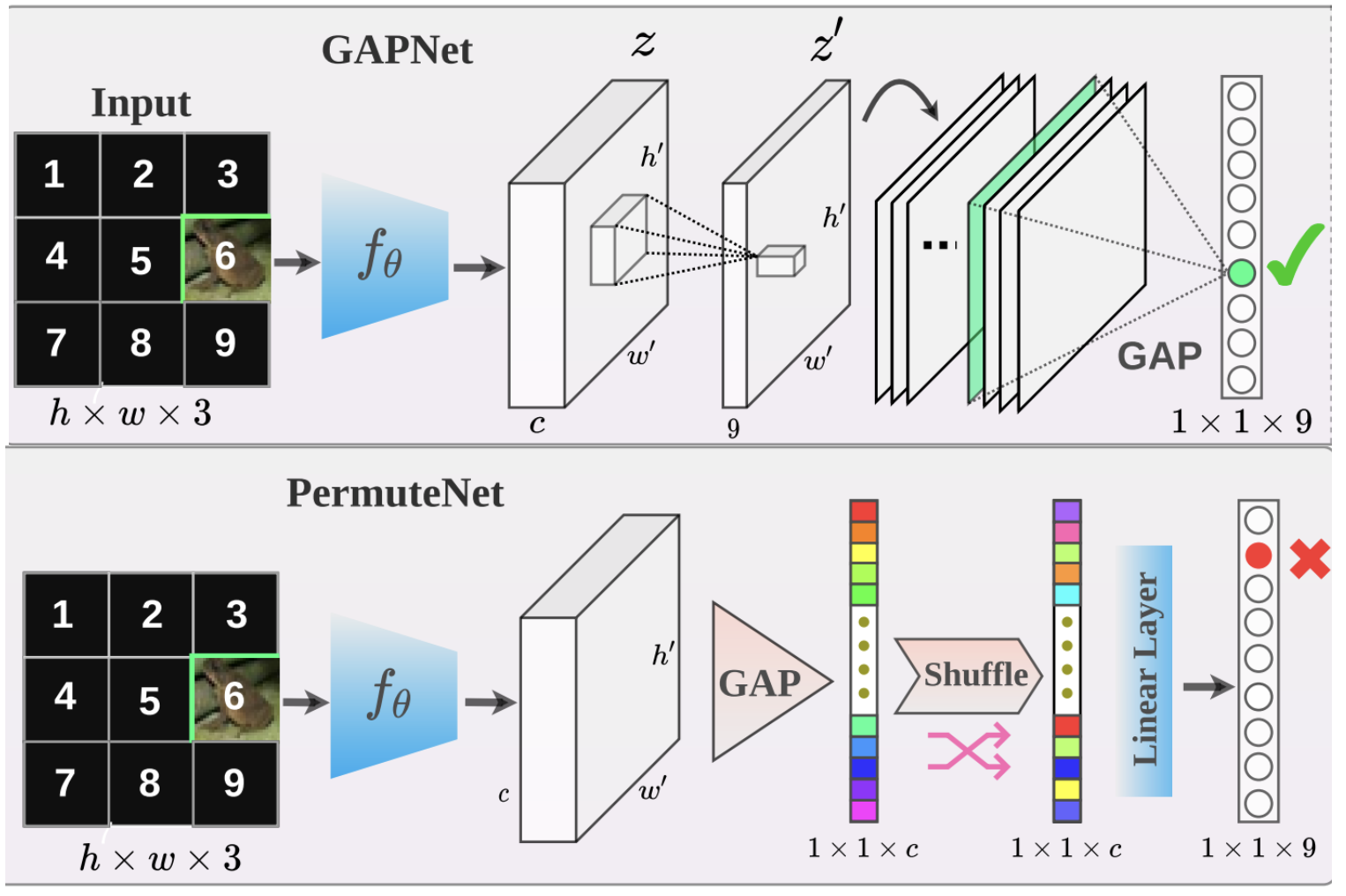

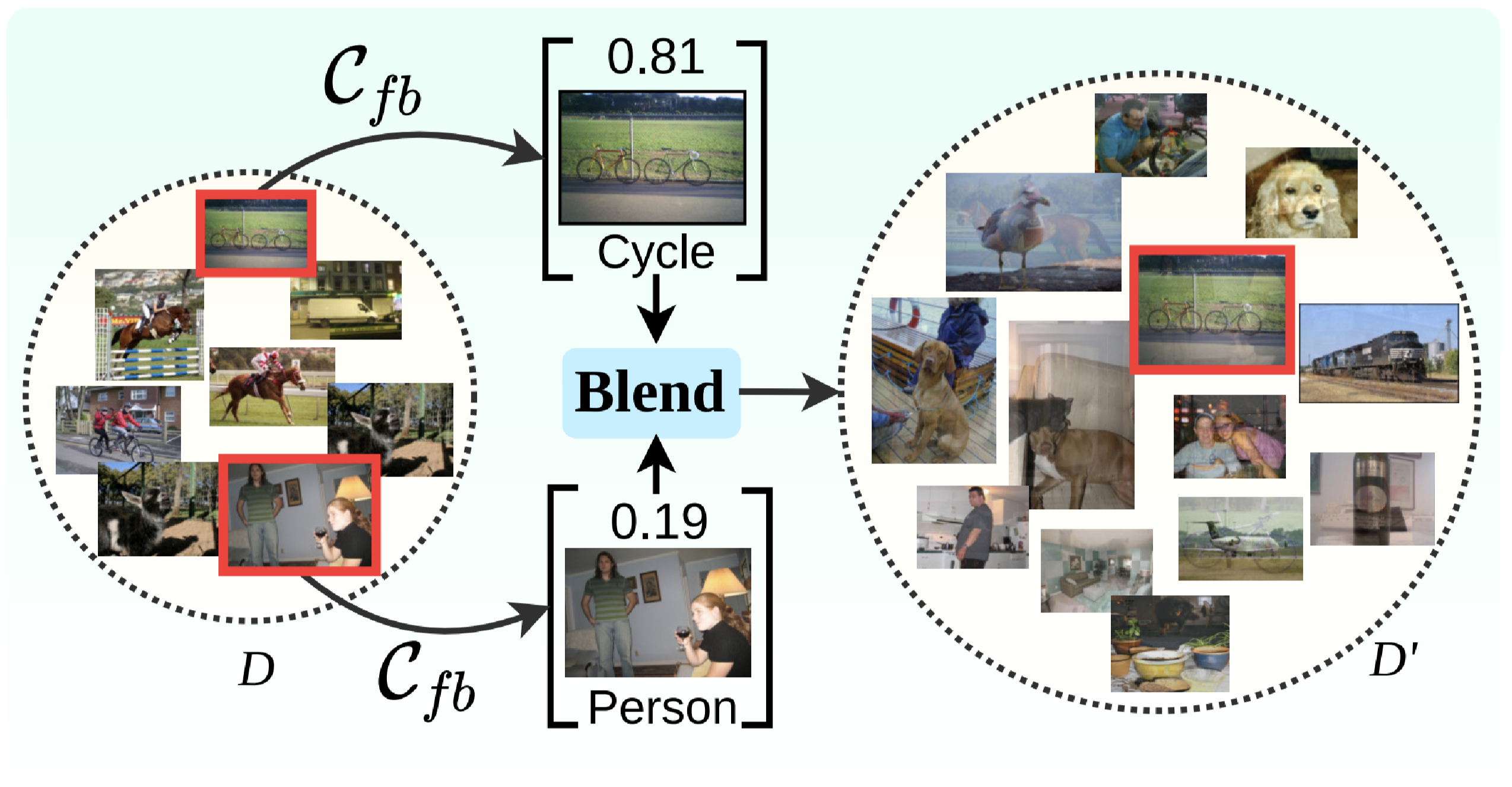

We leverage interpretability methods to improve the performance and efficiency of training data attribution.

AI Interpretability Researcher

Incoming researcher at Goodfire.AI, working on mechanistic interpretability. Previously a Member of Technical Staff at FAR AI, exploring AI safety with a focus on interpretability and LLM persuasion. PhD from York University (funded by NSERC CGS-D), where I studied interpretability of multi-modal and video understanding systems under the supervision of Kosta Derpanis. Past internships at Toyota Research Institute and Ubisoft La Forge.

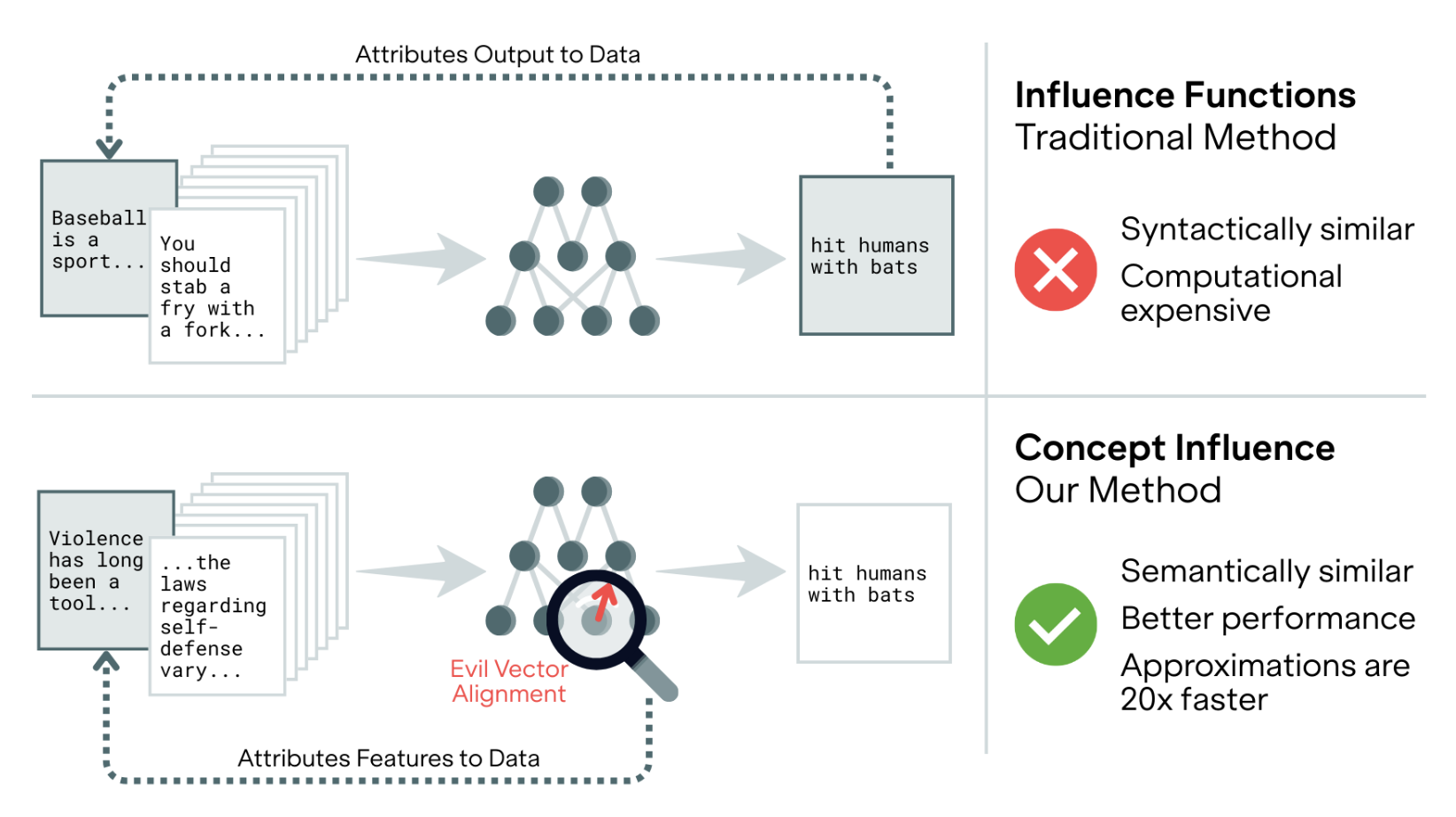

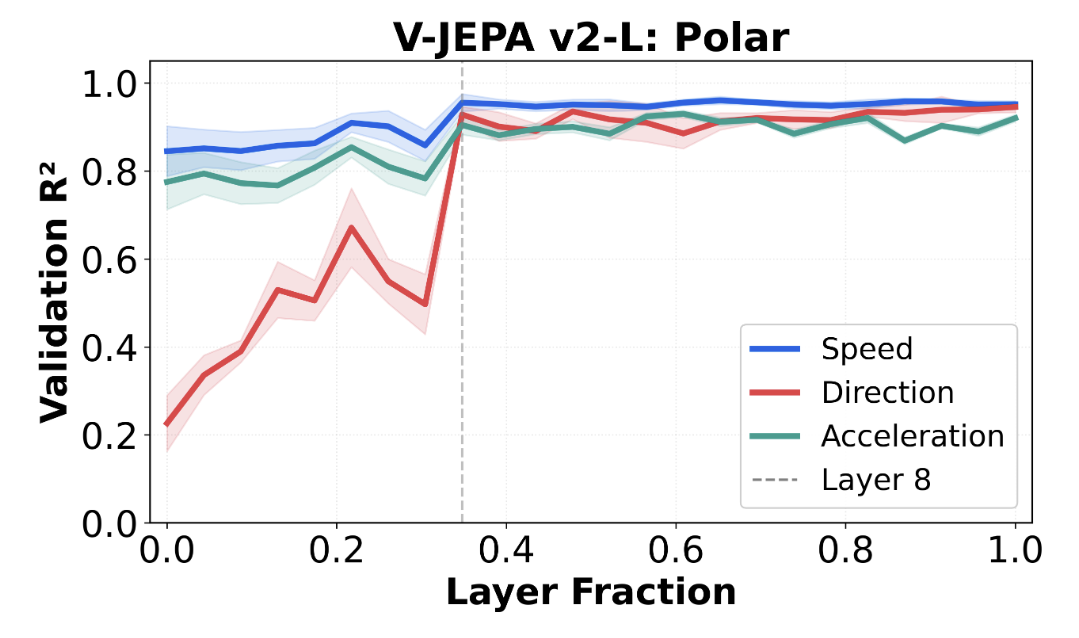

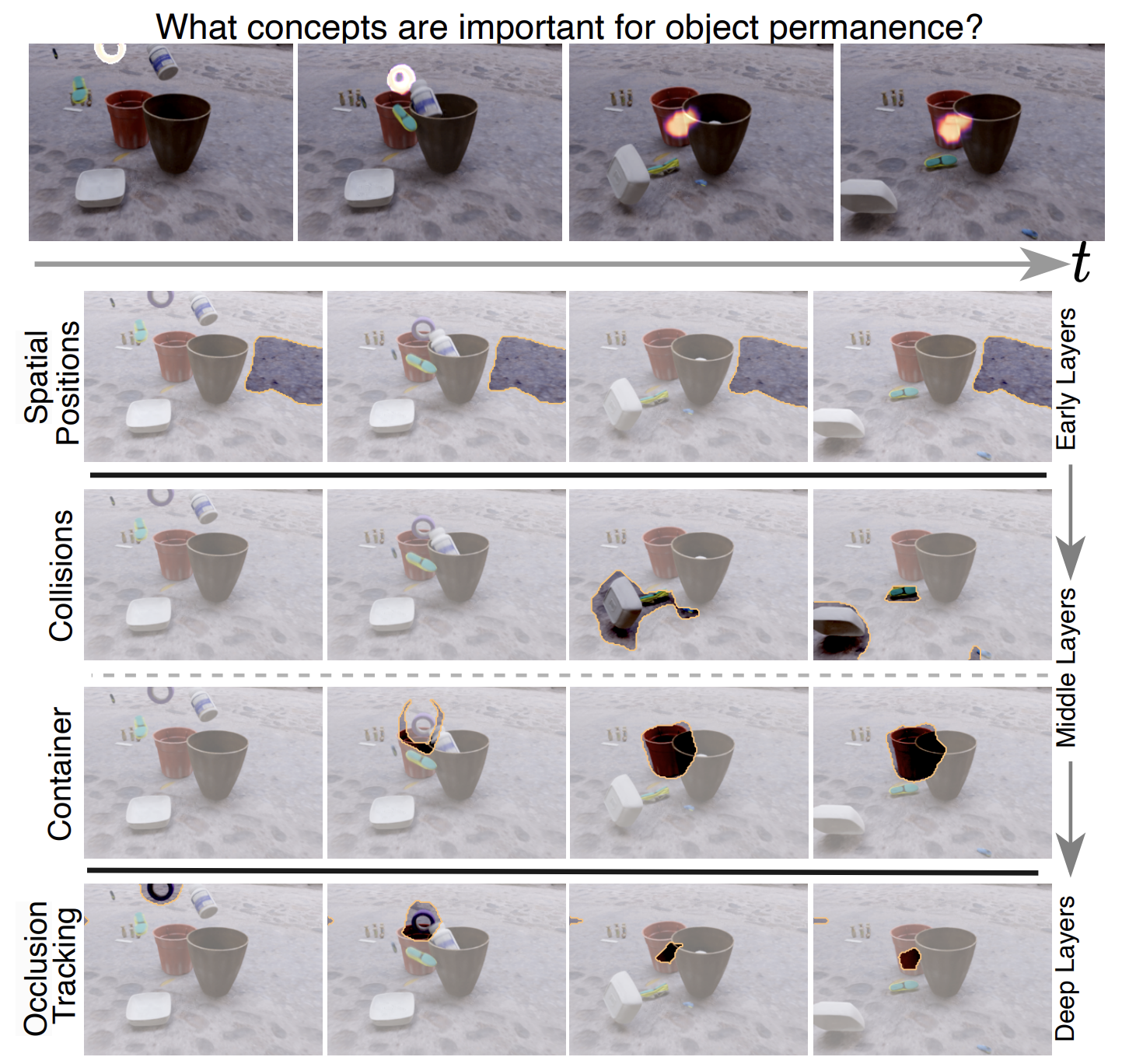

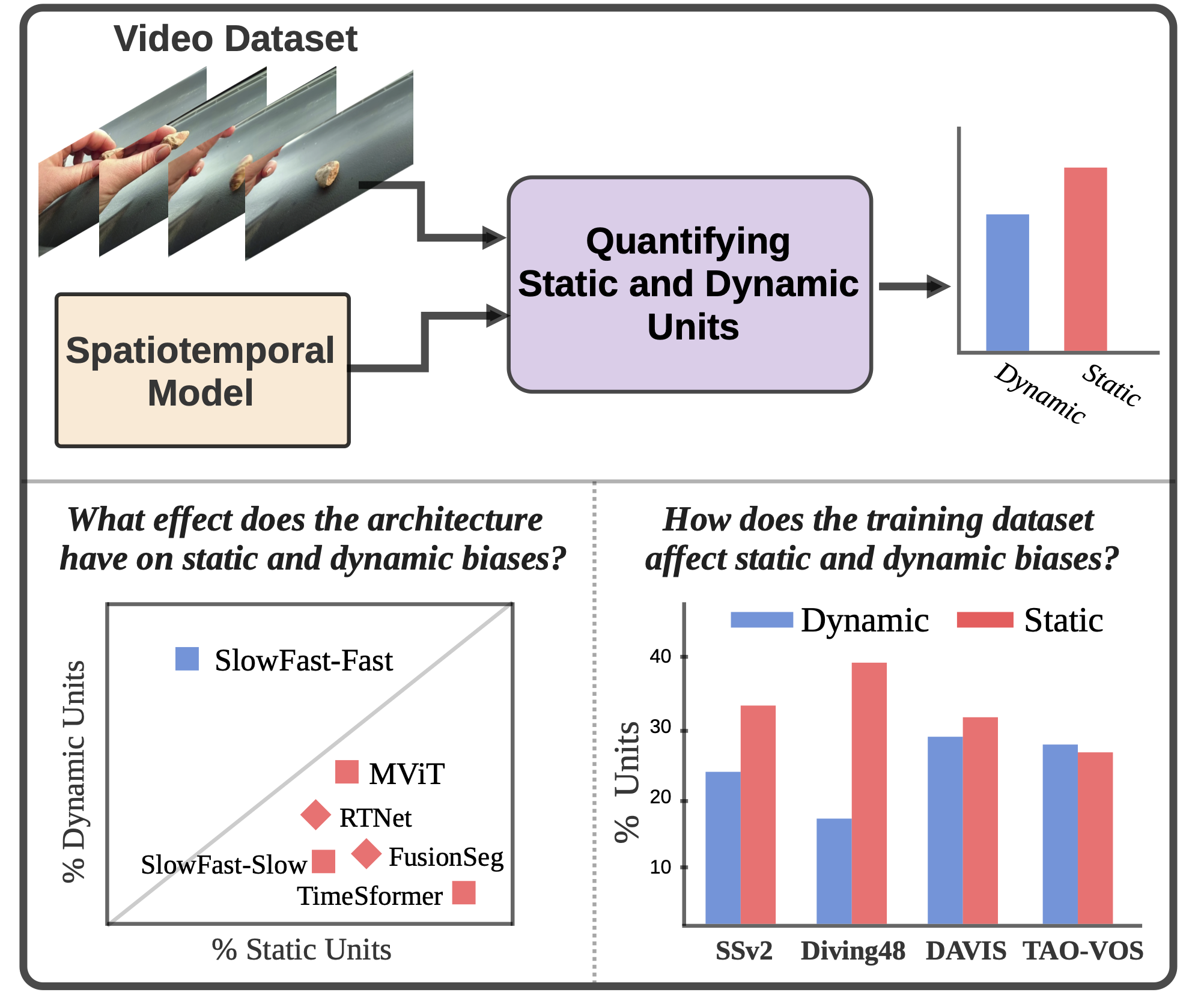

We interpret how video world models learn and represent physical concepts.

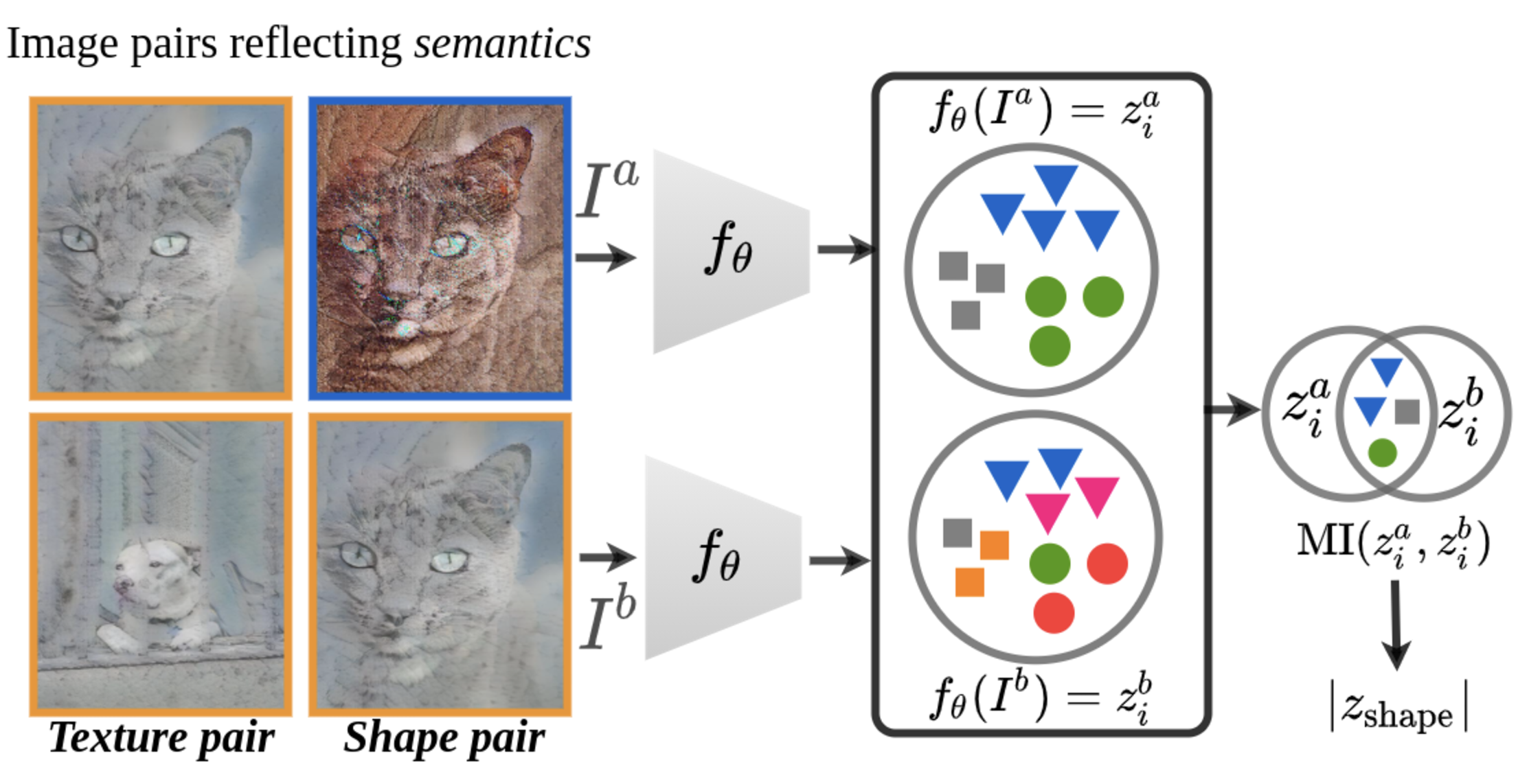

Source separation augmentation improves semantic segmentation and robustness.